What is Local AI Playground?

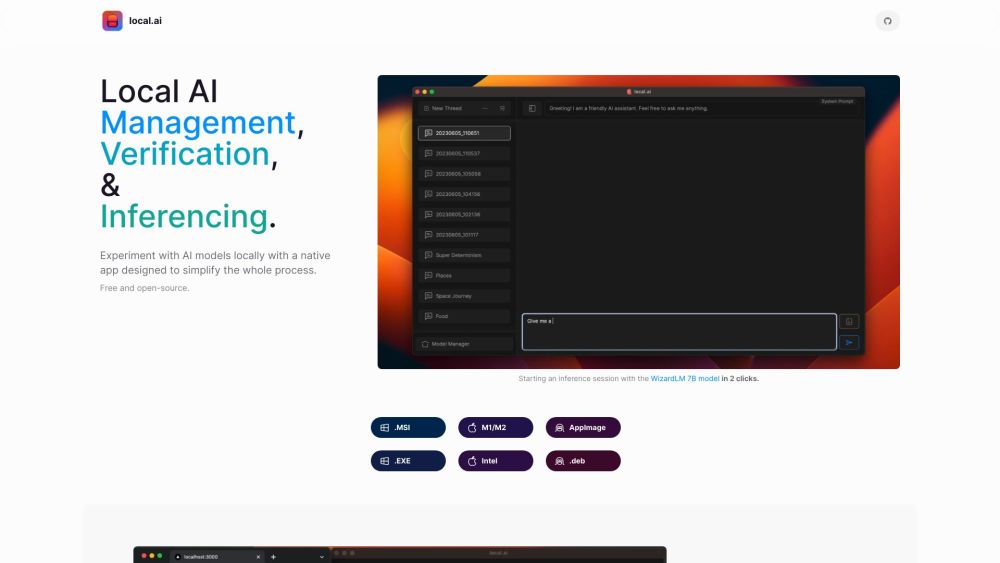

The Local AI Playground is a native app designed to simplify the process of experimenting with AI models locally. It allows you to perform AI tasks offline and in private, without the need for a GPU.

How to use Local AI Playground?

To use the Local AI Playground, install the app on your computer. It supports platforms including Windows, macOS, and Linux. Once installed, you can start an inference session with the provided AI models in two clicks. You can also manage your AI models, verify their integrity, and start a local streaming server for AI inferencing.

Local AI Playground's Core Features

CPU inferencing

Adapts to available threads

GGML quantization

Model management

Resumable, concurrent downloader

Digest verification

Streaming server

Quick inference UI

Writes to .mdx

Inference params
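
As a rough illustration of what "adapts to available threads" can mean in practice, the sketch below picks a worker thread count from the number of CPUs the machine reports. It is not taken from the app itself: the function name `pick_thread_count` and its `requested` and `reserve` parameters are hypothetical and exist only for this example.

```python
import os
from typing import Optional


def pick_thread_count(requested: Optional[int] = None, reserve: int = 0) -> int:
    """Choose a thread count for CPU inference (hypothetical helper).

    requested: thread count the user asked for, or None to use all usable CPUs.
    reserve:   number of CPUs to leave free for the rest of the system.
    """
    # os.cpu_count() can return None on unusual platforms; fall back to 1.
    available = os.cpu_count() or 1
    # Always keep at least one thread, even if reserve exceeds the CPU count.
    usable = max(1, available - reserve)
    if requested is None:
        return usable
    # Clamp the request into the range [1, usable].
    return max(1, min(requested, usable))


if __name__ == "__main__":
    print("threads:", pick_thread_count())
```

Clamping the request rather than rejecting it keeps the same settings usable on machines with different core counts.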

Local AI Playground's Use Cases

Experimenting with AI models offline

Performing AI tasks without requiring a GPU

Managing and organizing AI models

Verifying the integrity of downloaded models

Setting up a local AI inferencing server
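
Integrity verification of a downloaded model generally means hashing the file and comparing the result with a published digest. The sketch below shows that idea using SHA-256, which is an assumption: the text above does not say which digest algorithm the app uses. The helper names `file_sha256` and `verify_digest` are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in 1 MiB chunks.

    Chunked reads keep memory use flat even for multi-gigabyte model files.
    """
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_digest(path: Path, expected_hex: str) -> bool:
    """Compare the file's digest with an expected hex string (hypothetical helper)."""
    # Normalize whitespace and case before comparing the two hex strings.
    return hmac.compare_digest(file_sha256(path), expected_hex.strip().lower())
```

A typical use would be `verify_digest(Path("model.bin"), published_digest)`, where both the file name and the digest source are placeholders for whatever the model provider publishes.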

FAQ from Local AI Playground

What is the Local AI Playground?

The Local AI Playground is a native app designed to simplify the process of experimenting with AI models locally. It allows you to perform AI tasks offline and in private, without the need for a GPU.

How do I use the Local AI Playground?

To use the Local AI Playground, install the app on your computer. It supports platforms including Windows, macOS, and Linux. Once installed, you can start an inference session with the provided AI models in two clicks. You can also manage your AI models, verify their integrity, and start a local streaming server for AI inferencing.

What are the core features of the Local AI Playground?

The core features of the Local AI Playground are CPU inferencing that adapts to the available threads, GGML quantization, model management, a resumable and concurrent downloader, digest verification, a streaming server, a quick inference UI, writing to .mdx files, and configurable inference parameters.

What are the use cases for the Local AI Playground?

The Local AI Playground is useful for experimenting with AI models offline, performing AI tasks without requiring a GPU, managing and organizing AI models, verifying the integrity of downloaded models, and setting up a local AI inferencing server.