AI Directory: AI Agent, AI API, AI Developer Tools, AI Models, Large Language Models (LLMs)

What is MakeHub?

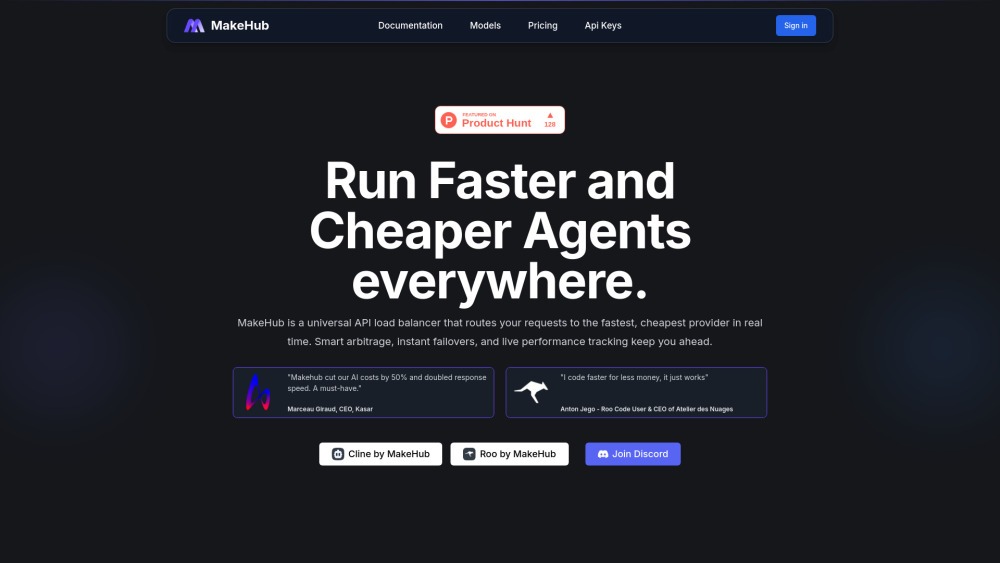

MakeHub is a universal API load balancer that routes AI model requests (for models such as GPT-4, Claude, and Llama) to the best-performing provider (such as OpenAI, Anthropic, or Together.ai) in real time. It exposes an OpenAI-compatible endpoint, offers a single unified API for both closed and open LLMs, and continuously benchmarks providers in the background on price, latency, and load. The goal is better performance, lower costs, smart arbitrage, instant failover, and live performance tracking for AI agents and applications.
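
Because the endpoint is OpenAI-compatible, a request should look like a standard OpenAI chat completions call pointed at a different base URL. The sketch below builds such a request with Python's standard library without sending it. The base URL `https://api.makehub.ai/v1` and the model name are placeholders assumed for illustration, not values confirmed by this listing; take the real ones from the MakeHub dashboard or documentation.

```python
import json
import urllib.request

# Hypothetical base URL for illustration; confirm the real one in MakeHub's docs.
BASE_URL = "https://api.makehub.ai/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style chat completions request."""
    payload = {
        "model": model,  # the model identifier format is an assumption
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it would be `urllib.request.urlopen(req)`. Any OpenAI-compatible client library configured with the same base URL and API key should behave the same way.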

How to use MakeHub?

To use MakeHub, you select the model you want through its single unified API. MakeHub then routes the request to the best available provider based on real-time metrics such as speed, cost, and uptime. This lets you run coding agents and AI applications faster and more cheaply without managing multiple provider APIs.
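
The routing step can be pictured as scoring each provider on the benchmarked metrics and picking the lowest score. MakeHub's actual algorithm is not described in this listing, so the following is only a minimal sketch: the `ProviderStats` fields, the min-max normalization, and the default weights are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ProviderStats:
    name: str
    price_per_mtok: float  # USD per million tokens (hypothetical metric)
    latency_ms: float      # recent median latency (hypothetical metric)
    load: float            # 0.0 = idle, 1.0 = saturated (hypothetical metric)


def _normalize(values: Sequence[float]) -> List[float]:
    """Min-max scale values to [0, 1]; all zeros if every value is equal."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def pick_provider(stats: Sequence[ProviderStats], w_price: float = 0.5,
                  w_latency: float = 0.3, w_load: float = 0.2) -> ProviderStats:
    """Return the provider with the lowest weighted, normalized score (lower is better)."""
    if not stats:
        raise ValueError("no providers available")
    prices = _normalize([s.price_per_mtok for s in stats])
    latencies = _normalize([s.latency_ms for s in stats])
    loads = _normalize([s.load for s in stats])
    scores = [
        w_price * price + w_latency * latency + w_load * load
        for price, latency, load in zip(prices, latencies, loads)
    ]
    best = min(range(len(stats)), key=scores.__getitem__)
    return stats[best]
```

With price weighted most heavily, a cheap but slow provider can win; shifting the weight to latency selects the fastest one instead. The weights are where the "cheapest versus fastest" trade-off is decided.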

MakeHub's Core Features

OpenAI-compatible endpoint

Single unified API for multiple AI providers

Dynamic routing to the cheapest and fastest provider

Real-time benchmarks (price, latency, load)

Smart arbitrage

Instant failover protection

Live performance tracking

Intelligent cost optimization

Universal tool compatibility

Support for closed and open LLMs
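
Instant failover, in its simplest form, means trying the next provider in a ranked list when one fails. A minimal sketch, assuming a caller-supplied `send` function (hypothetical) that raises on timeouts or errors; MakeHub's own failover logic is not documented in this listing.

```python
from typing import Callable, Dict, Sequence, Tuple, TypeVar

T = TypeVar("T")


class AllProvidersFailed(Exception):
    """Raised when every provider in the ranked list has failed."""


def call_with_failover(providers: Sequence[str], send: Callable[[str], T]) -> Tuple[str, T]:
    """Try providers in ranked order; return (provider, result) for the first success."""
    errors: Dict[str, Exception] = {}
    for name in providers:
        try:
            return name, send(name)
        except Exception as exc:  # a real client would catch only timeout/5xx-style errors
            errors[name] = exc
    # Every provider failed: surface all collected errors to the caller.
    raise AllProvidersFailed(errors)
```

Collecting every error before giving up makes it easier to tell a single flaky provider apart from a request that fails everywhere.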

MakeHub's Use Cases

Reducing AI API costs by up to 50%

Doubling AI model response speed

Achieving 99.99% uptime and consistent response times for AI applications

Eliminating dependency on a single AI provider to avoid performance issues and downtime

Enabling faster and cheaper development for coding agents

Optimizing AI infrastructure for performance and budget constraints

MakeHub Company

MakeHub Company name: MakeHub AI.

MakeHub Login

MakeHub Login Link: https://www.makehub.ai/dashboard/api-security

MakeHub Twitter

MakeHub Twitter Link: https://x.com/MakeHubAI

MakeHub Github

MakeHub Github Link: https://github.com/MakeHub-ai

FAQ from MakeHub

How does MakeHub help reduce AI costs?

MakeHub routes each request to the provider that is most cost-effective at that moment; users report cost reductions of up to 50%.
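
The savings come from price differences between providers serving the same model. The arithmetic below uses made-up per-million-token prices (not MakeHub data) to show how a per-request cost is computed and how a savings percentage follows from it.

```python
from typing import Dict, Tuple

# Made-up (input, output) prices in USD per million tokens; not MakeHub data.
PRICES: Dict[str, Tuple[float, float]] = {
    "provider-x": (2.0, 8.0),
    "provider-y": (1.0, 4.0),
}


def request_cost(input_tokens: int, output_tokens: int, in_price: float, out_price: float) -> float:
    """Cost in USD of one request, given per-million-token prices."""
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def cheapest(prices: Dict[str, Tuple[float, float]], input_tokens: int, output_tokens: int) -> str:
    """Name of the provider with the lowest cost for this request size."""
    return min(prices, key=lambda p: request_cost(input_tokens, output_tokens, *prices[p]))


def savings_percent(baseline_cost: float, routed_cost: float) -> float:
    """Percentage saved relative to the baseline provider."""
    return 100.0 * (baseline_cost - routed_cost) / baseline_cost
```

For a request with 2,000 input and 500 output tokens, provider-x costs (2,000 × 2.0 + 500 × 8.0) / 1,000,000 = $0.008 and provider-y costs $0.004, so routing to provider-y saves 50% in this invented example.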

How does MakeHub improve response speed?

By routing each request to the fastest available provider and failing over instantly when a provider goes down, MakeHub claims it can double response speed and keep performance consistent.
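
One common way to decide which provider is currently "fastest" is to keep an exponentially weighted moving average (EWMA) of observed latencies per provider: each new sample updates the estimate as `alpha * latest + (1 - alpha) * previous`. This is a generic technique shown for illustration, not MakeHub's documented method; the class name and the smoothing factor `alpha` are hypothetical.

```python
from typing import Dict, Optional


class LatencyTracker:
    """Exponentially weighted moving average (EWMA) of latency per provider."""

    def __init__(self, alpha: float = 0.2) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._ewma: Dict[str, float] = {}

    def record(self, provider: str, latency_ms: float) -> None:
        """Fold a new latency sample into the provider's running average."""
        prev = self._ewma.get(provider)
        if prev is None:
            self._ewma[provider] = latency_ms  # the first sample seeds the average
        else:
            self._ewma[provider] = self.alpha * latency_ms + (1 - self.alpha) * prev

    def estimate(self, provider: str) -> Optional[float]:
        """Current smoothed latency, or None if the provider has no samples."""
        return self._ewma.get(provider)

    def fastest(self) -> str:
        """Provider with the lowest smoothed latency so far."""
        if not self._ewma:
            raise ValueError("no latency samples recorded")
        return min(self._ewma, key=self._ewma.__getitem__)
```

A larger `alpha` reacts faster when a provider slows down; a smaller one smooths out one-off spikes.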

Which AI models and providers does MakeHub support?

MakeHub supports over 40 state-of-the-art (SOTA) models from 33 providers, including OpenAI, Anthropic, Together.ai, Google, Mistral, DeepSeek, and others.

What is MakeHub's pricing model?

MakeHub uses a pay-as-you-go model with a flat 2% fee on each credit refuel. Payment infrastructure fees are charged separately.
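
The 2% fee is simple arithmetic. A minimal sketch using `Decimal` to avoid floating-point rounding on money; it assumes the fee is added on top of the refuel amount and rounded to the cent, neither of which the listing specifies, and it leaves out the separate payment infrastructure fees.

```python
from decimal import Decimal, ROUND_HALF_UP

FEE_RATE = Decimal("0.02")  # flat 2% fee on credit refuels, per the listing
CENT = Decimal("0.01")


def refuel_fee(amount: Decimal) -> Decimal:
    """2% fee on a credit refuel, rounded to the cent (rounding rule is an assumption)."""
    return (amount * FEE_RATE).quantize(CENT, rounding=ROUND_HALF_UP)


def total_charge(amount: Decimal) -> Decimal:
    """Assumes the fee is added on top of the credit; payment infrastructure fees are not modeled."""
    return amount + refuel_fee(amount)
```

Under these assumptions, refueling 100.00 in credit incurs a 2.00 fee.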